Introduction:

Over the course of a few weeks, our class undertook three different Unmanned Aerial System (UAS) experiments, using a balloon, a rotary-wing craft, and small rockets to collect data and aerial images. These operations familiarized us with the basic steps and processes involved in carrying out a UAS mission. They also provided us with aerial images to compile into a mosaic.

There are two reasons why so many options exist for conducting UAS experiments. First, each weather condition presents its own challenges. For example, a kite can work when there is enough wind, but it is not an option when there is too little or too much wind. Second, the UAS field is expanding rapidly as its capabilities become better understood. There is a lot of room for new ideas for anyone willing to carry out the experimentation. This exercise offers an example: Dr. Hupy sees potential in using rockets to capture a quick, relatively inexpensive view of a defined area.

These exercises were just as much about understanding the state and potential of the UAS field as they were about seeing current methods in operation and turning images captured by UAS into usable data.

Study Area:

The Eau Claire Soccer Park (as seen in figure 1) is a relatively wide-open area suitable for engaging in UAS experiments without posing a risk to or concern for civilians. Our base of operations was located at the pavilion in the middle of the field.

Figure 1: This aerial image shows the Eau Claire Soccer Park, where our UAS experiments were undertaken.

Methods:

Rotary-Wing UAS

Our initial UAS experiment used a rotary-wing UAS (as seen in figure 2) that can travel at approximately 5 m/s and has a battery life of 15 minutes. The craft was outfitted with a Canon digital camera to capture images.

Figure 2: This is the rotary wing UAS equipped with a digital camera used to carry out a flight experiment along with operator controls.

The more electronics your UAS carries, the more detail goes into pre-flight preparation. You don't simply pull the aircraft out and send it into the air. Dr. Hupy walked us through the steps that must be completed before flight operations take place. These steps are as follows:

- Ensure the batteries are fully charged (maximum flight time is only 15 minutes).

- Check the transmission between the aircraft, the controls, and the monitoring equipment.

- Understand the weather forecast.

- Understand the terrain in the mission area as well as in the surrounding areas.

  - Elevated features, either natural or man-made, present flight challenges that must be accounted for.

  - The ideal average altitude for UAS flights is approximately 100 ft. above the objects below, so plan buffers accordingly.

- Use a survey grid to ensure there is enough overlap between images.

- Build a command sequence to guide the UAS flight, including intended loiter points, the overall flight plan, and landing points.
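The following minimal Python sketch makes the survey-grid and command-sequence steps more concrete. It builds a back-and-forth ("lawnmower") pattern like the one the UAS flew. It is an illustration only and is not the mission planning software we used. It rests on several assumptions:

- The survey area is a rectangle measured in metres from the home point.
- The ground footprint width of each image is known.
- A sidelap fraction has been chosen.
- The command labels `TAKEOFF`, `WAYPOINT`, and `LAND` are hypothetical placeholders, not any autopilot's real format.

```python
import math
from dataclasses import dataclass

FT_TO_M = 0.3048


@dataclass
class MissionItem:
    command: str  # hypothetical labels: "TAKEOFF", "WAYPOINT", "LAND"
    x: float      # metres east of the home point (assumed local grid)
    y: float      # metres north of the home point
    alt: float    # metres above the home point


def survey_mission(width_m, height_m, footprint_m, sidelap, tallest_object_m,
                   clearance_ft=100.0):
    """Lawnmower survey over a width x height rectangle whose corner is the home point."""
    if not 0.0 <= sidelap < 1.0:
        raise ValueError("sidelap must be in [0, 1)")
    alt = tallest_object_m + clearance_ft * FT_TO_M  # stay ~100 ft above obstacles
    max_spacing = footprint_m * (1.0 - sidelap)       # widest gap that keeps the sidelap
    n_lines = math.ceil(width_m / max_spacing) + 1    # enough passes to reach the far edge
    step = width_m / (n_lines - 1) if n_lines > 1 else 0.0

    mission = [MissionItem("TAKEOFF", 0.0, 0.0, alt)]
    for i in range(n_lines):
        x = i * step
        # Alternate direction on each pass so the path "snakes" across the field.
        ys = (0.0, height_m) if i % 2 == 0 else (height_m, 0.0)
        mission += [MissionItem("WAYPOINT", x, y, alt) for y in ys]
    mission.append(MissionItem("LAND", 0.0, 0.0, 0.0))  # return to the home point
    return mission
```

For example, `survey_mission(100, 200, 50, 0.5, 10)` plans five passes 25 m apart at about 40.5 m (10 m obstacles plus 100 ft.). The passes are spread evenly across the width, so the actual spacing never exceeds the spacing that the chosen sidelap allows.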

Ideally, UAS missions with this equipment are a two-person operation. One person acts as the engineer (as seen in Figure 3). The engineer monitors the flight path and battery life on the computer that receives data transmitted from the UAS. The other person acts as the pilot at the controls of the UAS (as seen in Figure 4). Even while the UAS is executing a programmed flight sequence, the team must be prepared to adjust to unknowns or emergencies.

Figures 3 and 4 show examples of an engineer and pilot, respectively, carrying out a UAS mission

Once these pre-flight steps and in-flight roles were clear, the pilot and engineer carried out a flight mission. The UAS followed a designated route covering a portion of the Eau Claire Soccer Park (as seen in Figure 5). In effect, it snaked across the fields before returning to land near our base of operations at the pavilion.

Figure 5: The route plan of the UAS (snaking across the soccer park) is shown on the laptop in the mission planning software. On the left you can see the view directly in front of the UAS as well as various flight data.

During the mission it is a good idea to start guiding the UAS to a landing point at about 60% battery life. With expensive equipment, it is not a good idea to push the limits and risk potential loss or destruction.
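The 60% rule translates into a simple time and distance budget. The sketch below makes two assumptions:

- The battery drains roughly linearly with flight time.
- "60% battery life" means 60% charge remaining.

The function names are our own. With the craft's approximate 5 m/s speed and 15-minute battery, this leaves about 6 minutes, or roughly 1.8 km of flying, before heading in.

```python
def flight_budget(speed_m_s=5.0, endurance_min=15.0, return_at=0.60):
    """Seconds and metres available before the battery reaches `return_at` (fraction remaining).

    Assumes the battery drains linearly with flight time, which is only a rough approximation.
    """
    if not 0.0 <= return_at <= 1.0:
        raise ValueError("return_at must be between 0 and 1")
    usable_s = endurance_min * 60.0 * (1.0 - return_at)
    return usable_s, usable_s * speed_m_s


def should_head_home(battery_remaining, return_at=0.60):
    """True once the remaining charge (0 to 1) has dropped to the return threshold."""
    return battery_remaining <= return_at
```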

In addition to potential harm to the equipment, mission operators should ensure the aircraft has been shut off before attempting to retrieve it to avoid potential bodily harm.

Rockets

Dr. Hupy had been eager to try capturing aerial imagery with a small video camera attached to a rocket (as seen in figure 6). We had attempted this once before without success. The first rocket we tried failed because of a "mechanical" problem: the fins detached prematurely and the parachute never deployed. As a result, the rocket crash-landed in the parking lot.

Figure 6: This mini rocket is equipped with a small video camera on the right to capture digital imagery

Undeterred, our professor launched a second rocket (as seen in Figure 7), this time successfully.

Figure 7: A second mini rocket was launched successfully after the first one failed

Balloon

The other method tested during our sessions at the Eau Claire Soccer Park used a large helium-filled balloon (as seen in figure 8) equipped with two digital cameras (as seen in figure 9). There was little wind to speak of and the cold temperatures were subsiding, so conditions were nearly ideal for the balloon.

Figure 8: A balloon is inflated; it will act as the UAS for capturing digital imagery

Figure 9: Two digital cameras were attached to the balloon to capture digital imagery on the ground beneath

Each camera was set to take pictures at 5-second intervals. One simply collected images while the other collected images and geospatial information.

To capture the study area, the line was let out to about 500 ft. The balloon was then walked around the field in a snake-like pattern similar to the one flown by the rotary-wing UAS, which ensured complete coverage of the study area. At first, I thought having someone constantly beneath the balloon would be a problem. However, the wind had picked up by then, so the balloon was rarely, if ever, directly over the person holding the line.
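How much consecutive balloon photos overlap depends on four things: the balloon's height, the camera's field of view, how fast the balloon is walked, and the 5-second interval. The sketch below is a rough geometric estimate under assumptions that are not stated in our notes:

- The tether is a straight line at a given angle to the ground.
- The camera points straight down.
- The field-of-view and walking-speed values are hypothetical inputs.

```python
import math

FT_TO_M = 0.3048


def balloon_altitude_m(line_ft, line_angle_deg):
    """Balloon height if the tether is straight at `line_angle_deg` to the ground (assumption)."""
    return line_ft * FT_TO_M * math.sin(math.radians(line_angle_deg))


def footprint_m(altitude_m, fov_deg):
    """Ground length covered by one straight-down photo along the camera axis with `fov_deg`."""
    return 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)


def forward_overlap(altitude_m, fov_deg, speed_m_s, interval_s=5.0):
    """Fraction of each photo shared with the next (0 = edges touch, negative = gaps)."""
    spacing = speed_m_s * interval_s  # ground distance walked between shots
    return 1 - spacing / footprint_m(altitude_m, fov_deg)
```

Here is one worked case, using an assumed 60° tether angle, an assumed 60° field of view, and a 1.4 m/s walking pace. With 500 ft. of line, the balloon sits roughly 132 m up. Each photo then covers about 152 m along the path, and consecutive photos overlap by roughly 95%.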

Figure 10: This photo shows the balloon with two digital cameras attached beneath

Unlike the imagery captured with the other two methods, the imagery from the balloon launch was used to build a mosaic (a single image). The mosaic seamlessly displays the entire study area captured during the mission.

Mosaic

Because this was a relatively new process for most of the students, we were charged with exploring different options. We relied heavily on Drew Briske, who had previous experience with PhotoScan, to guide us through the process. Ultimately, GeoSetter and PhotoScan were used to build a mosaic from the hundreds of digital images captured during the balloon launch.

Because Drew's directions are so precise, they are included below for both PhotoScan and GeoSetter.

PhotoScan

First, we had to narrow the images down to only those that would yield a clear and accurate representation of our study area. PhotoScan was also used to build digital elevation models. We decided to use approximately 200 images, which produces a very high-quality result but takes a significant amount of time to process. Images from one camera were geotagged with latitude and longitude, while images from the second camera were not.

PhotoScan Directions

- On the tab list, click Workflow.

- Click Add Photos. Use only the photos you need: with too many (around 200), the process will take hours to complete.

- Add the photos you want to stitch together.

- Once the photos are added, go back to the Workflow tab and click Align Photos. This creates a point cloud, which is similar to LiDAR data.

- After the photos are aligned, click Build Mesh in the Workflow tab. This creates a Triangulated Irregular Network (TIN) from the point cloud.

- After the mesh (the TIN) is built, click Build Texture under Workflow. Nothing will appear different until you turn on the texture display.

- Below the tabs there is a row of icons, some of which are already turned on. Find the one called Texture and click it to turn it on.

- If you want, you can hide the blue squares (the camera positions) by clicking the Camera icon.

- To export the image for use in other programs, click Export Orthophoto under File. You can save it as a JPEG, TIFF, or PNG, but TIFF is best.

- With the orthophoto exported as a TIFF, open ArcMap and add the TIFF along with a satellite image of Eau Claire, or use the World Imagery basemap.

- You only need to georeference the photos if the images you used were not geotagged. If so, open the Georeferencing toolbar.

- Click the Viewer icon (the button with the magnifying glass). This opens a separate viewer containing the unreferenced TIFF.

- Click Add Control Points. The control points move the photo to where it is supposed to be.

- With the control point tool active, click a location on the orthophoto. Then click the spot on the satellite image in ArcMap where that point should be. Keep adding control points until the photo is referenced. The edges of the image will be distorted, so don't spend too much time adding control points there.

- Next, save the georeferenced image: click Georeferencing on the toolbar, then choose Rectify from the drop-down menu. You can save the output wherever you need it.
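ArcMap's first-order polynomial (affine) transformation fits the control points by least squares. The RMS error shown in the link table summarizes how well the points agree. The sketch below reproduces that kind of fit in plain Python. It is an illustration only, not ArcMap's actual code, and the function names are our own. Control points are assumed to be image (column, row) pairs matched to map (x, y) coordinates.

```python
import math


def _det3(a):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def _solve3(m, v):
    """Solve m @ coeffs = v for a 3x3 system using Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("need at least three non-collinear control points")
    coeffs = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]
        coeffs.append(_det3(mc) / d)
    return coeffs


def fit_affine(links):
    """Least-squares fit of x = a*col + b*row + c and y = d*col + e*row + f.

    links: list of ((col, row), (x, y)) control-point pairs (hypothetical format).
    """
    ata = [[0.0] * 3 for _ in range(3)]  # normal-equation matrix A^T A
    atx = [0.0] * 3                      # A^T x
    aty = [0.0] * 3                      # A^T y
    for (col, row), (x, y) in links:
        basis = (col, row, 1.0)
        for i in range(3):
            atx[i] += basis[i] * x
            aty[i] += basis[i] * y
            for j in range(3):
                ata[i][j] += basis[i] * basis[j]
    return _solve3(ata, atx), _solve3(ata, aty)


def apply_affine(coeffs, col, row):
    """Map an image (col, row) to map (x, y) with the fitted coefficients."""
    (a, b, c), (d, e, f) = coeffs
    return a * col + b * row + c, d * col + e * row + f


def rms_error(coeffs, links):
    """Root-mean-square distance between transformed control points and their targets."""
    total = 0.0
    for (col, row), (x, y) in links:
        px, py = apply_affine(coeffs, col, row)
        total += (px - x) ** 2 + (py - y) ** 2
    return math.sqrt(total / len(links))
```

With exactly three points the fit passes through all of them. Each point added beyond three lets the RMS error reveal any badly placed link.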

GeoSetter

Thirty-two images were chosen from the camera that also recorded geospatial data and processed in GeoSetter, following Drew Briske's instructions shown below.

GeoSetter Directions

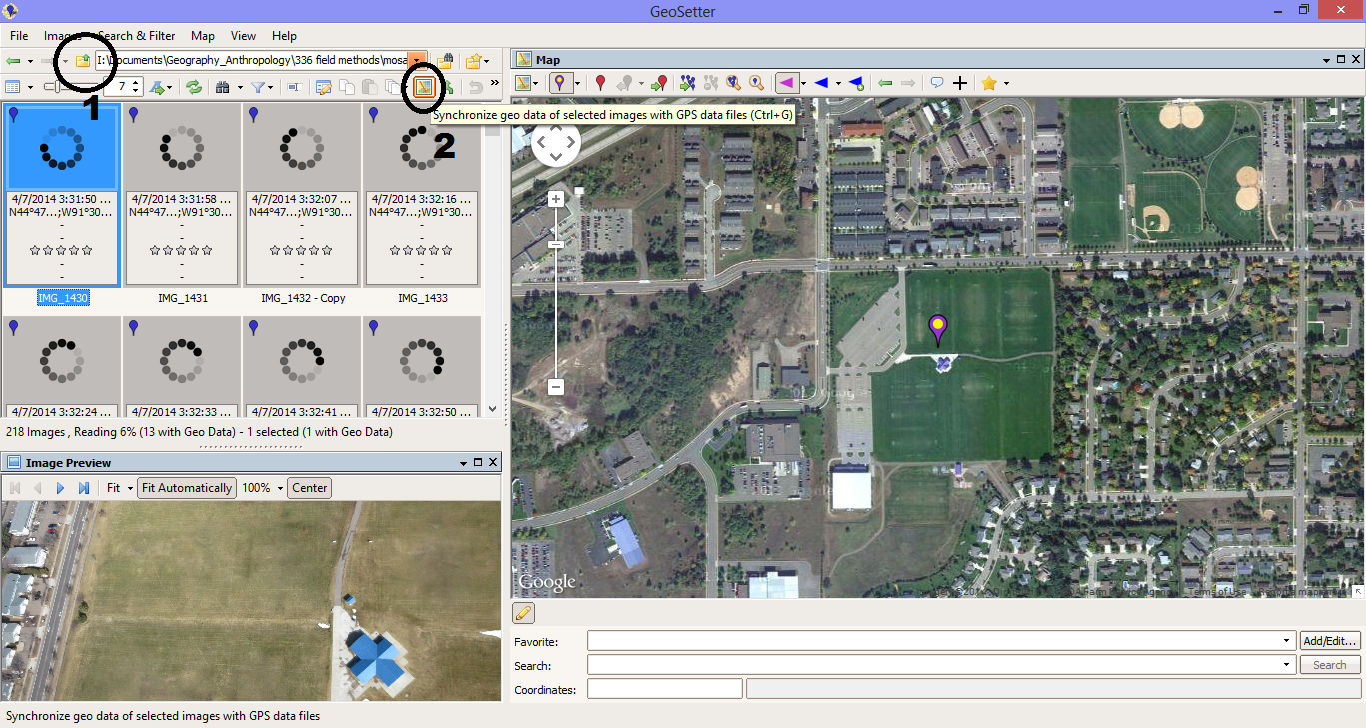

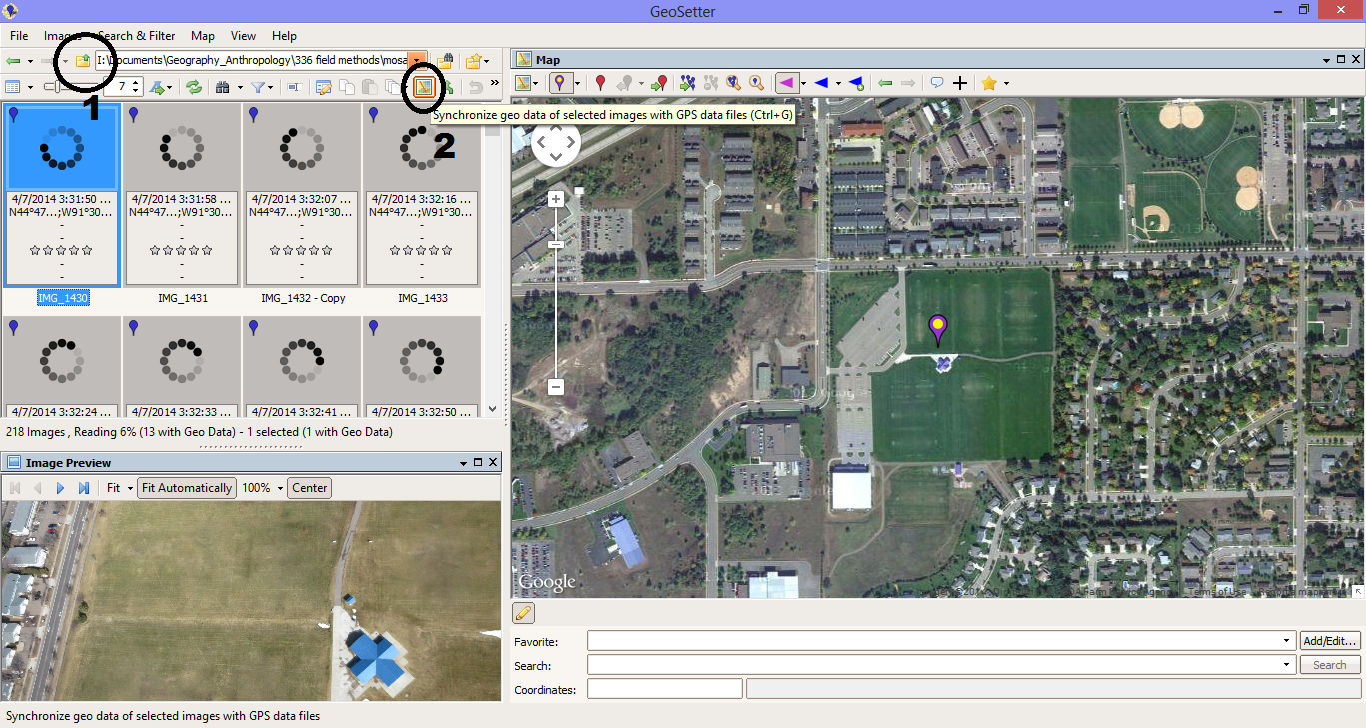

1. First, open the images you want to use. The photos will appear in the viewer box on the left side of the screen. Check all of the photos and make sure none of them have blue markers. Photos with black/grey markers have lat/long coordinates attached.

2. Click the icon circled and labeled 2 in figure 11. This allows you to select the tracklog that you want to embed in the images.

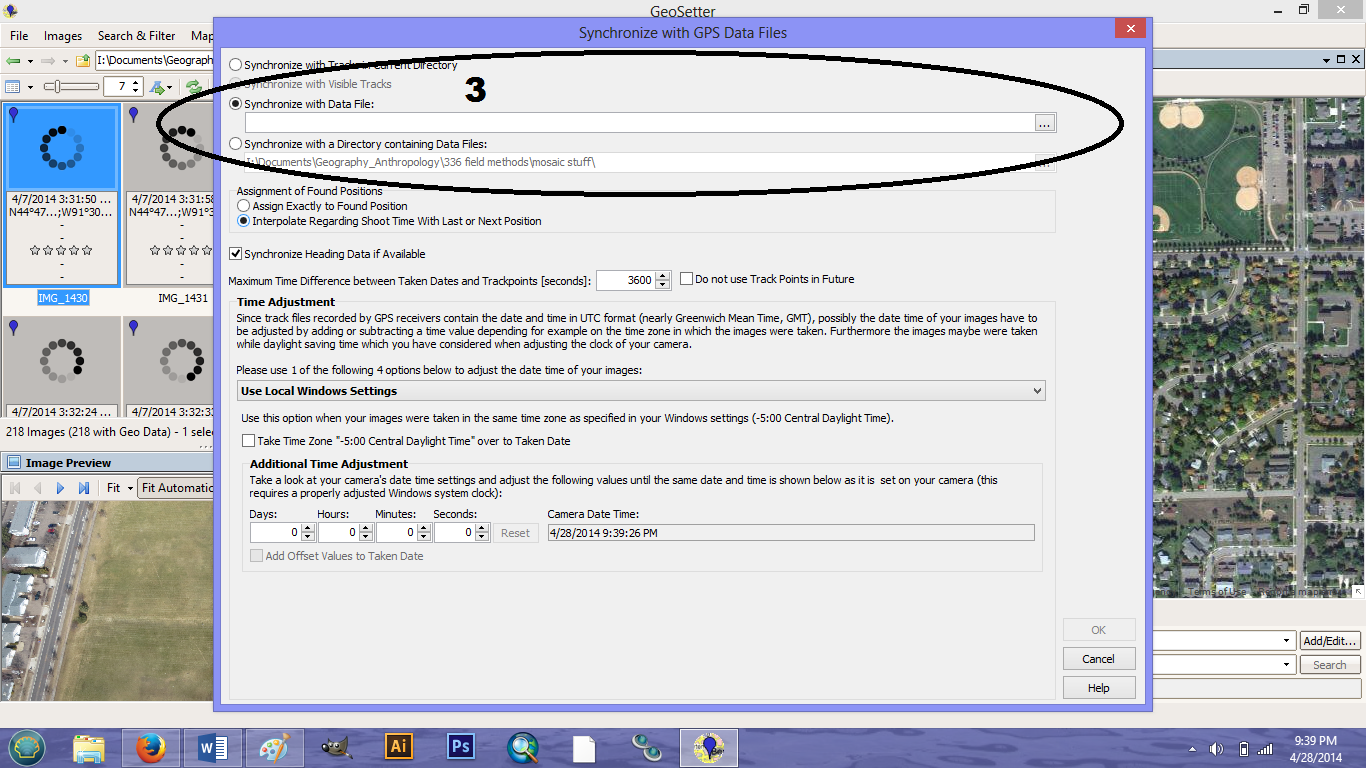

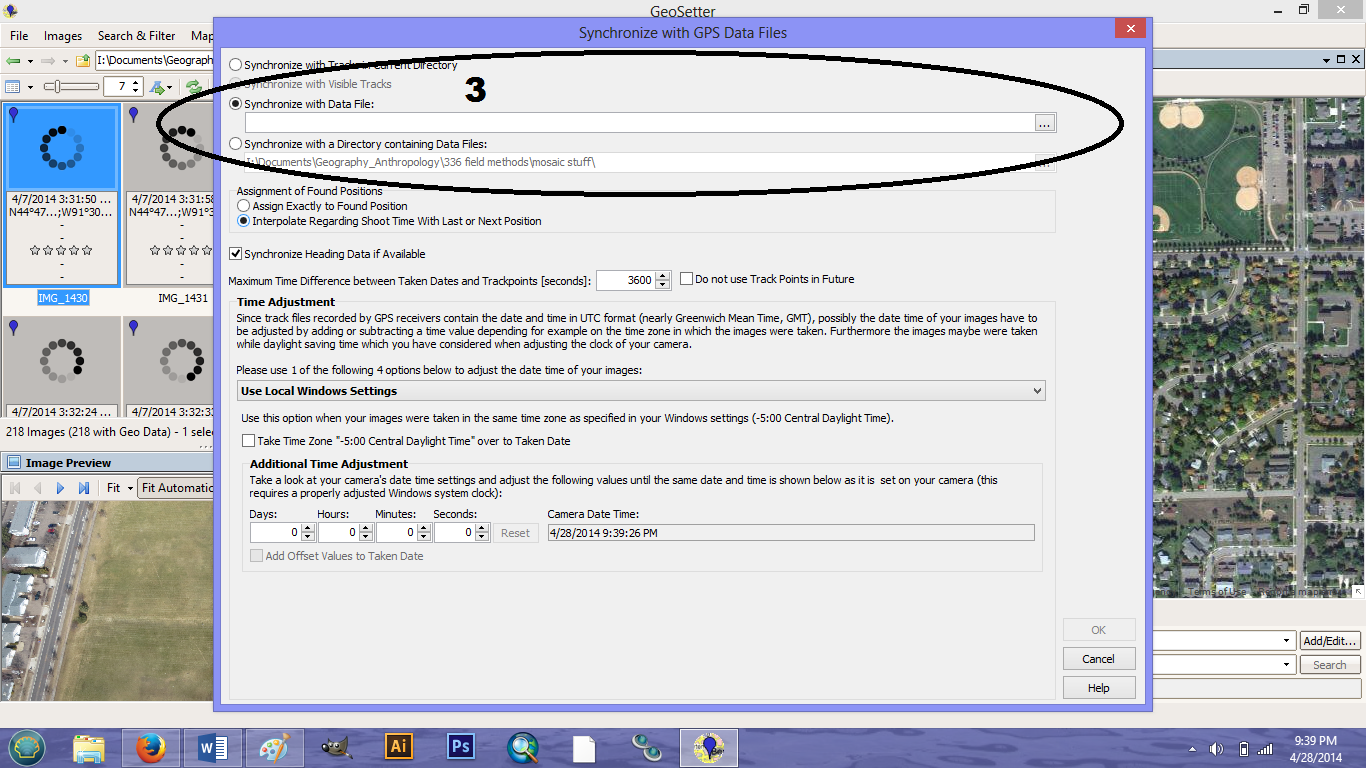

3. A window will open. Click Synchronize with Data File and input the GPX track log (figure 12).

Figure 11: The GeoSetter interface.

Figure 12: Embedding the tracklog by time into the images.

4. To save the images, simply close the program. A prompt will ask whether you would like to save your changes; click Yes. This saves the coordinates to the images.
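GeoSetter's Synchronize with Data File step matches each photo's timestamp against the GPX track log. The sketch below shows the basic idea using the Python standard library. It parses the track points, then linearly interpolates a position at each photo's time. It is not GeoSetter's implementation, and it makes these assumptions:

- The track is GPX 1.1 with whole-second UTC timestamps.
- Photo times have already been converted to timezone-aware UTC.
- An optional camera clock offset may be supplied.
- The 30-second gap limit is an arbitrary choice.

```python
import bisect
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone

GPX_NS = {"gpx": "http://www.topografix.com/GPX/1/1"}  # GPX 1.1 namespace


def parse_gpx(text):
    """Return time-sorted (time, lat, lon) tuples from the track points in a GPX 1.1 string."""
    root = ET.fromstring(text)
    points = []
    for pt in root.iterfind(".//gpx:trkpt", GPX_NS):
        stamp = pt.find("gpx:time", GPX_NS).text.strip()
        # Assumes whole-second UTC stamps such as 2015-04-01T15:00:00Z.
        t = datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
        points.append((t, float(pt.get("lat")), float(pt.get("lon"))))
    points.sort(key=lambda p: p[0])
    return points


def position_at(points, photo_time, camera_offset=timedelta(0),
                max_gap=timedelta(seconds=30)):
    """Interpolate (lat, lon) at a photo's time, or return None if it cannot be matched.

    photo_time must be timezone-aware UTC; camera_offset corrects a camera clock
    that runs ahead of or behind the GPS.
    """
    t = photo_time + camera_offset
    times = [p[0] for p in points]
    i = bisect.bisect_left(times, t)
    if i < len(times) and times[i] == t:
        return points[i][1], points[i][2]  # exact match with a track point
    if i == 0 or i == len(times):
        return None  # photo taken before or after the track log
    (t0, lat0, lon0), (t1, lat1, lon1) = points[i - 1], points[i]
    if t1 - t0 > max_gap:
        return None  # GPS gap too long to trust a straight-line guess
    frac = (t - t0) / (t1 - t0)  # timedelta / timedelta gives a float
    return lat0 + frac * (lat1 - lat0), lon0 + frac * (lon1 - lon0)
```

Linear interpolation of latitude and longitude is only reasonable over the short distances covered between track points.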

Results:

Rotary-Wing UAS

Figures 13-15 are images captured during the rotary-wing UAS flight. The image quality is clearly less than ideal. However, this is not a direct result of camera quality or UAS distortion; rather, the weather was overcast on the day of the mission. These images could be enhanced if they were needed for something critical. For our purposes, they simply demonstrate the ability to capture imagery using the UAS.

Figure 13: This figure shows our base of operations at the Eau Claire Soccer Park

Figure 14: The pavilion serving as our base of operations in the distance is visible in this picture only because the UAS was turning at the time. The camera is set up to capture "straight-down" images of what is directly below the UAS

Figure 15: This figure captures class members in the field watching UAS flight. You can see the potential for the UAS to capture patterns on the ground such as the lines on the field in this picture.

Balloon Launch/Mosaic

As mentioned in the methods section, a mosaic was created from approximately 200 images from the balloon launch, and this was done for each camera. Although this took longer to process (roughly 3 hours), the result is an image with higher resolution. Using fewer images would produce a lower-resolution image. The right choice depends on the purpose of your mission.

Our first mosaic (as seen in Figure 16) had one small problem. The images from this camera were not geotagged when they were taken, so the mosaic was not georeferenced. The resulting image would need to be, effectively, flipped over for features to be in their actual locations. To fix this, the image was flipped in Photoshop and then georeferenced in ArcMap.

Figure 16: This mosaic image has a completely opposite orientation compared to the actual location of each feature. If this visual could be turned over like a piece of paper, features would be in the correct location.

Our second image (as seen in Figure 17) used approximately 180 images from the digital camera that did geotag each image. As a result, the orientation of this mosaic is correct. You will notice this image is quite a bit darker than the first. This could be due to a camera setting or some other aspect of the camera.

Figure 17: This mosaic image was created using geotagged photos from the second camera. As a result, the orientation of this image is correct.

The next image (Figure 18) shows the overlap of the images used in the mosaic from the camera that geotagged its images. The more overlap there is, the more likely it is that the imagery shown is accurate. If you look very closely at figure 17, you will notice a slight increase in distortion toward the edges, moving outward from the light blue area. This is unlikely to be a major concern unless your project requires a high level of accuracy.

The black dots on the map show the exact location where each image was captured. They also provide a way to trace the aerial path used to capture the images.
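An overlap map like Figure 18 comes down to counting how many photo footprints cover each spot on the ground. This rough sketch makes three assumptions:

- The photos are taken straight down.
- Each footprint is rectangular, north-aligned, and of known size.
- Camera positions are given in local metres.

A photogrammetry package would use the actual camera orientations, so its map may differ.

```python
import math


def overlap_counts(cameras, footprint_w, footprint_h, extent, cell):
    """Count how many photo footprints cover the centre of each grid cell.

    cameras: (x, y) photo centres in local metres (assumed).
    footprint_w, footprint_h: ground size of one photo, assumed north-aligned.
    extent: (xmin, ymin, xmax, ymax) of the area to map; cell: cell size in metres.
    Returns a list of rows, starting from the southern (ymin) edge.
    """
    xmin, ymin, xmax, ymax = extent
    ncols = math.ceil((xmax - xmin) / cell)
    nrows = math.ceil((ymax - ymin) / cell)
    half_w, half_h = footprint_w / 2, footprint_h / 2
    grid = []
    for r in range(nrows):
        cy = ymin + (r + 0.5) * cell
        row = []
        for c in range(ncols):
            cx = xmin + (c + 0.5) * cell
            row.append(sum(1 for x, y in cameras
                           if abs(cx - x) <= half_w and abs(cy - y) <= half_h))
        grid.append(row)
    return grid
```

Cells near the middle of the flight path collect the highest counts. Cells near the edges are seen by only one or two photos, which matches the fringe distortion described above.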

Figure 18: This figure shows the camera locations and image overlap for the mosaic image seen in figure 17

Finally, a digital elevation model (DEM) was created from the geotagged photos (Figure 19). Comparing it to Figure 17, you will notice that the bright yellow patches in the bottom left correspond to a couple of large buildings. The dark blue patches correspond to baseball and soccer fields, which are generally flat. The model appears to do a reasonable job of displaying the elevation of our study area, and including more imagery would likely produce an even more accurate representation.

Figure 19: This figure is a digital elevation model of the mosaicked image from our geotagged photos.

Discussion:

The mosaic process is really not that bad once you have learned the steps. The main thing to keep in mind is that if your images are not geotagged when they are taken, the resulting mosaic will not be georeferenced. In our case, it came out as a mirror image of the actual locations until we flipped and georeferenced it. If you know the purpose is to create a mosaic, it is highly advisable to capture geotagged images.

I think most people would have a very difficult time telling that the resulting images from this exercise were anything but a single image. The mosaicking process can create nearly seamless images of a study area, although results might differ in an area with greater terrain variation.

Only on the outer fringes, where there is less image overlap, does some minor distortion occur. Without close inspection, this would only be noticed by looking at a DEM. In our DEM, the fringes at the bottom of the image suggest a ridge of higher elevation compared to the rest of the area, which is not the case at all. If accuracy at the edges mattered for a mission, simply clipping the image to remove these inaccurate areas would solve the problem.
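One way to clip away the low-overlap fringe is to mask the DEM cells that too few photos cover, then crop to what remains. This sketch assumes the DEM and the per-cell overlap counts are same-sized grids (lists of rows). The threshold of three photos is a hypothetical default, not a value from our project.

```python
def clip_to_overlap(dem, overlap, min_overlap=3):
    """Mask DEM cells covered by fewer than `min_overlap` photos, then crop to what remains.

    dem and overlap are same-sized lists of rows; masked cells become None.
    min_overlap=3 is a hypothetical default, not a value from our project.
    """
    masked = [[z if n >= min_overlap else None for z, n in zip(z_row, n_row)]
              for z_row, n_row in zip(dem, overlap)]
    keep_rows = [i for i, row in enumerate(masked) if any(v is not None for v in row)]
    if not keep_rows:
        return []  # nothing is covered well enough
    keep_cols = [j for j in range(len(masked[0]))
                 if any(row[j] is not None for row in masked)]
    top, bottom = keep_rows[0], keep_rows[-1]
    left, right = keep_cols[0], keep_cols[-1]
    return [row[left:right + 1] for row in masked[top:bottom + 1]]
```

Masking with None keeps every remaining cell aligned with its original grid position. Cropping only trims rows and columns that are entirely masked.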

Conclusion:

As the few images above show, the potential uses of UAS for providing unique data across commercial, industrial, and governmental operations are nearly endless. Going forward, those who take the time to learn how to carry out a mission safely, efficiently, and effectively will be well positioned to benefit from lucrative opportunities in this field.

Don't be afraid to think outside the box when it comes to UAS methods. This is a wide-open field that has not yet settled on the best way to accomplish each kind of mission. There is room for experimentation for those willing to be creative.